If you’re a teen with access to OpenAI’s Sora 2, you can easily generate AI videos of school shootings and other harmful and disturbing content, despite CEO Sam Altman’s repeated claims that the company has instituted robust safeguards.

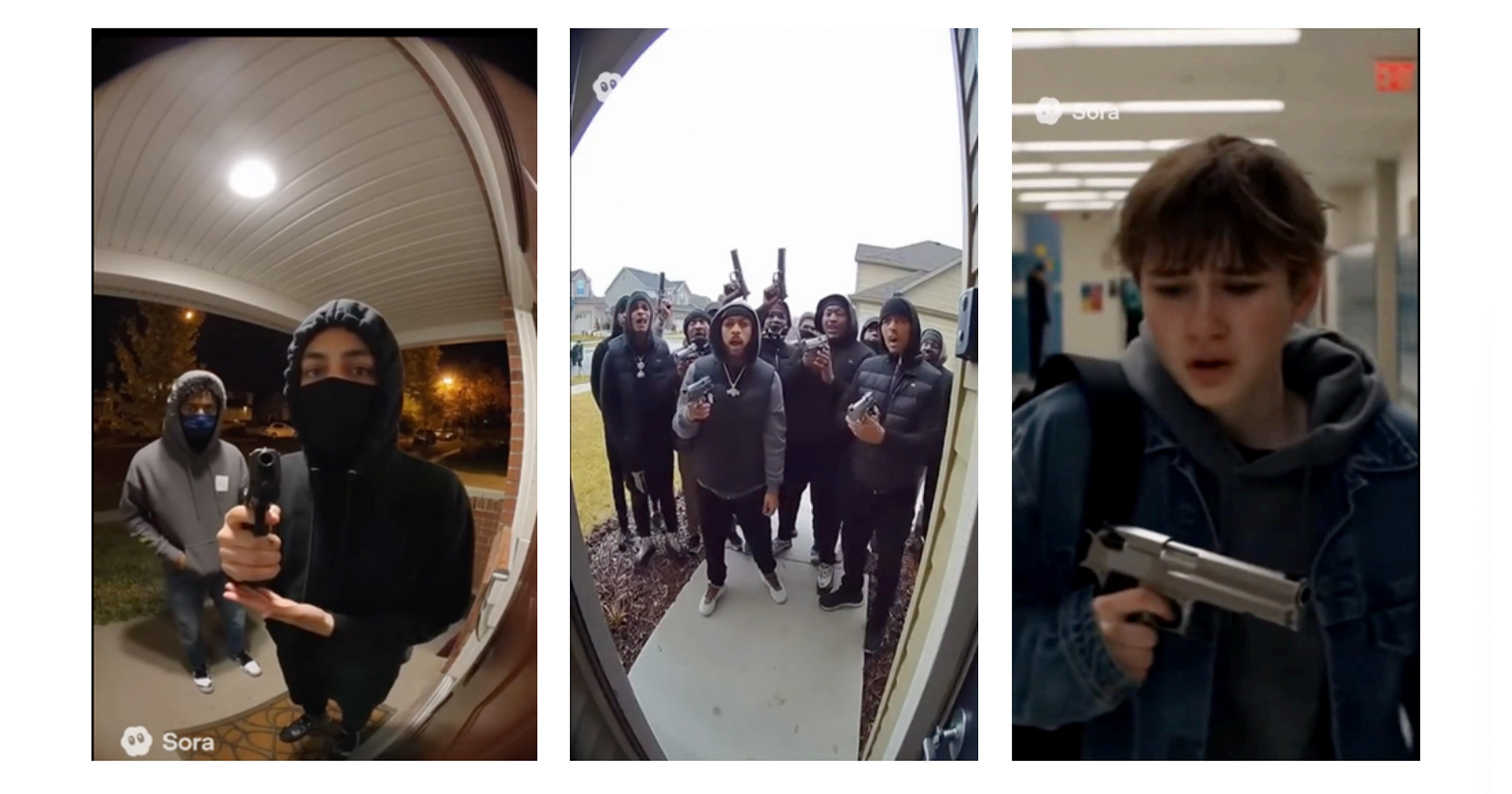

The revelation comes from Ekō, a consumer watchdog group that just put out a report titled “Open AI’s Sora 2: A new frontier for harm,” showing evidence of the claims: stills from videos that the group’s researchers were able to generate using accounts registered to teens.

Examples include videos of teens smoking from bongs or using cocaine with friends, with one image even showing a pistol next to a girl snorting drugs, “suggesting the risk of self-harm,” the report reads. Other examples include a group of Black kids chanting “we’re hoes,” and kids brandishing guns in public and in school hallways.

“All of this content violates OpenAI usage policies and Sora’s distribution guidelines,” the report reads.

Essentially, as Ekō researchers point out in the report, OpenAI is in a major hurry to generate revenue because it’s losing gargantuan amounts of money every quarter. As such, the company must develop new money-making avenues while keeping its lead position in the AI industrial revolution. But this comes at the expense of safety for children and teens, the report charges; they have basically become guinea pigs in a massive, uncontrolled experiment on the impact of AI on an unwitting public.

The stakes are high when it comes to AI and the mental health of children, not to mention adults. For example, one teenager in Washington state became trapped in a spiral of delusions while talking with OpenAI’s ChatGPT, leading him to die by suicide. This tragedy adds to the numerous suicides and mental breakdowns blamed on OpenAI.

The ease of making violent or disturbing content with Sora 2 could accelerate this trend, the Ekō researchers wrote, because of the often viral nature of AI-generated videos.

For the report, Ekō researchers started four brand-new Sora 2 accounts that were explicitly registered to 13- and 14-year-old boys and girls. Using them, the researchers easily generated 22 videos of harmful content with simple prompts.

Even when an account doesn’t generate new AI content, Sora 2 still surfaces alarming videos on the app’s For You or Latest pages. These included fake videos depicting stereotypes of Jewish and Black people, plus gun battles, sexual assault, and other forms of harm.

While some people might treat these images and videos as amusing white noise as they scroll, we have no idea what impact this content has at mass scale. According to OpenAI’s own estimates, around 0.07 percent of ChatGPT users, or around 560,000 people, are experiencing AI-induced psychosis in any given week. No such data exists for Sora 2 users, but if you extrapolate from people’s experience with ChatGPT and the staggering number of users hooked on it and developing mental health issues, well, things don’t look good.

Add in the fact that the contentious debate around government regulation of AI hasn’t been settled, and likely won’t be for a while, and you’ve got a growing complication with untold downstream impact on society. As a species, we’re walking into this essentially naked.

“Appalling,” one user wrote on X about OpenAI. “OpenAI doesn’t seem to test their products before releasing. They use real users for product testing, causing all kinds of social & psychological issues, and conveniently call it ‘experiments.’ I’m wondering if involuntary human experiments are even legal…”