Have you ever had the experience of rereading a sentence several times only to realize you still don’t understand it? As taught to scores of incoming college freshmen, when you realize you’re spinning your wheels, it’s time to change your approach.

This process, becoming aware of something not working and then changing what you’re doing, is the essence of metacognition, or thinking about thinking.

It’s your brain monitoring its own thinking, recognizing a problem, and controlling or adjusting your approach. In fact, metacognition is fundamental to human intelligence and, until recently, has been understudied in artificial intelligence systems.

My colleagues Charles Courchaine, Hefei Qiu, and Joshua Iacoboni and I are working to change that. We’ve developed a mathematical framework designed to allow generative AI systems, specifically large language models like ChatGPT or Claude, to monitor and regulate their own internal “cognitive” processes. In some sense, you can think of it as giving generative AI an inner monologue, a way to assess its own confidence, detect confusion, and decide when to think harder about a problem.

Why Machines Need Self-Awareness

Today’s generative AI systems are remarkably capable but fundamentally unaware. They generate responses without genuinely knowing how confident or confused their response might be, whether it contains conflicting information, or whether a problem deserves extra attention. This limitation becomes critical when generative AI’s inability to recognize its own uncertainty can have serious consequences, particularly in high-stakes applications such as medical diagnosis, financial advice, and autonomous vehicle decision-making.

For example, consider a medical generative AI system analyzing symptoms. It might confidently suggest a diagnosis without any mechanism to recognize situations where it would be more appropriate to pause and reflect, like “These symptoms contradict each other” or “This is unusual, I should think more carefully.”

Developing such a capacity would require metacognition, which involves both the ability to monitor one’s own reasoning through self-awareness and the ability to control the response through self-regulation.

Inspired by neurobiology, our framework aims to give generative AI a semblance of these capabilities by using what we call a metacognitive state vector, which is essentially a quantified measure of the generative AI’s internal “cognitive” state across five dimensions.

Five Dimensions of Machine Self-Awareness

One way to think about these five dimensions is to imagine giving a generative AI system five different sensors for its own thinking.

We quantify each of these concepts within an overall mathematical framework to create the metacognitive state vector and use it to control ensembles of large language models. In essence, the metacognitive state vector converts a large language model’s qualitative self-assessments into quantitative signals that it can use to control its responses.

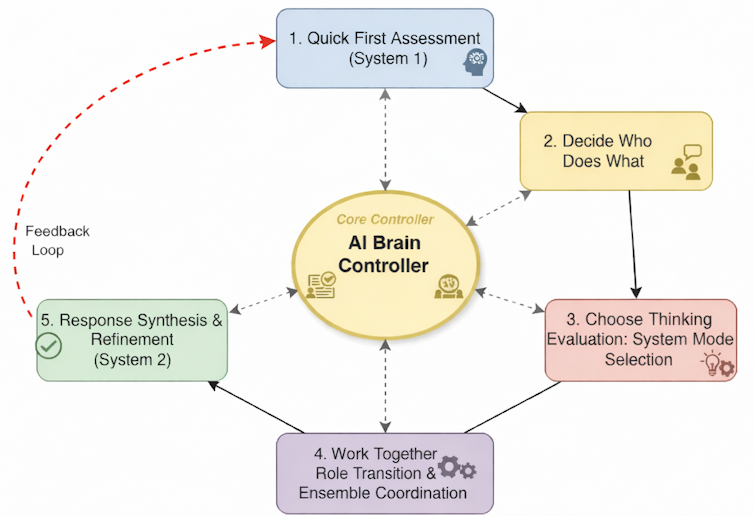

For example, when a large language model’s confidence in a response drops below a certain threshold or the conflicts in the response exceed some acceptable level, it might shift from fast, intuitive processing to slow, deliberative reasoning. This is analogous to what psychologists call System 1 and System 2 thinking in humans.
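The threshold-based shift described above can be sketched in a few lines of Python. This is only an illustration, not the authors' framework: the class name `MetacognitiveSignals`, the function `select_mode`, the assumption that both signals lie in the range 0 to 1, and the two threshold values are all hypothetical, since the article gives no numbers.

```python
from dataclasses import dataclass

# Hypothetical thresholds; the article does not specify numeric values.
CONFIDENCE_FLOOR = 0.6
CONFLICT_CEILING = 0.3


@dataclass(frozen=True)
class MetacognitiveSignals:
    """Two illustrative signals, each assumed to lie in [0, 1]."""
    confidence: float  # how sure the model is of its response
    conflict: float    # how much the response contradicts itself


def select_mode(signals: MetacognitiveSignals) -> str:
    """Return 'system2' when confidence is too low or conflict is too high."""
    if signals.confidence < CONFIDENCE_FLOOR or signals.conflict > CONFLICT_CEILING:
        return "system2"  # slow, deliberative reasoning
    return "system1"      # fast, intuitive processing


print(select_mode(MetacognitiveSignals(confidence=0.9, conflict=0.1)))
print(select_mode(MetacognitiveSignals(confidence=0.4, conflict=0.1)))
```

With these assumed thresholds, the first call stays in the fast mode and the second switches to the deliberative one because its confidence falls below the floor.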

This conceptual diagram shows the basic idea for giving a set of large language models an awareness of the state of its processing. Image credit: Ricky J. Sethi

Conducting an Orchestra

Imagine a large language model ensemble as an orchestra where each musician, an individual large language model, comes in at certain times based on the cues received from the conductor. The metacognitive state vector acts as the conductor’s awareness, constantly monitoring whether the orchestra is in harmony, whether someone is out of tune, or whether a particularly difficult passage requires extra attention.

When performing a familiar, well-rehearsed piece, like a simple folk melody, the orchestra easily plays in quick, efficient unison with minimal coordination needed. This is the System 1 mode. Each musician knows their part, the harmonies are straightforward, and the ensemble operates almost automatically.

But when the orchestra encounters a complex jazz composition with conflicting time signatures, dissonant harmonies, or sections requiring improvisation, the musicians need greater coordination. The conductor directs the musicians to shift roles: Some become section leaders, others provide rhythmic anchoring, and soloists emerge for specific passages.

This is the kind of system we’re hoping to create in a computational context by implementing our framework, orchestrating ensembles of large language models. The metacognitive state vector informs a control system that acts as the conductor, telling it to switch modes to System 2. It can then tell each large language model to assume different roles (for example, critic or expert) and coordinate their complex interactions based on the metacognitive assessment of the situation.
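As a rough sketch of the conductor idea, the snippet below assigns roles to ensemble members depending on the current mode. The roles "expert" and "critic" come from the text above; the function `assign_roles`, the "generalist" role for the fast mode, the round-robin assignment, and the model names are all assumptions made for illustration and are not taken from the authors' framework.

```python
from typing import Dict, List

# "expert" and "critic" are named in the article; the ordering is an assumption.
DELIBERATIVE_ROLES = ["expert", "critic"]


def assign_roles(model_names: List[str], mode: str) -> Dict[str, str]:
    """Map each ensemble member to a role for the given mode."""
    if mode == "system1":
        # Fast path: every model simply answers, with no specialised roles.
        # "generalist" is a placeholder name, not a term from the article.
        return {name: "generalist" for name in model_names}
    # Deliberative path: hand out the specialised roles in round-robin order.
    return {
        name: DELIBERATIVE_ROLES[i % len(DELIBERATIVE_ROLES)]
        for i, name in enumerate(model_names)
    }


ensemble = ["model_a", "model_b", "model_c"]  # hypothetical ensemble members
print(assign_roles(ensemble, "system1"))
print(assign_roles(ensemble, "system2"))
```

A real controller would presumably choose roles from the full metacognitive assessment rather than a fixed rotation; the rotation here only keeps the example short.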

Impact and Transparency

The implications extend far beyond making generative AI slightly smarter. In health care, a metacognitive generative AI system could recognize when symptoms don’t match typical patterns and escalate the problem to human experts rather than risking misdiagnosis. In education, it could adapt teaching strategies when it detects student confusion. In content moderation, it could identify nuanced situations requiring human judgment rather than applying rigid rules.

Perhaps most importantly, our framework makes generative AI decision-making more transparent. Instead of a black box that simply produces answers, we get systems that can explain their confidence levels, identify their uncertainties, and show why they chose particular reasoning strategies.

This interpretability and explainability is crucial for building trust in AI systems, especially in regulated industries or safety-critical applications.

The Road Ahead

Our framework doesn’t give machines consciousness or true self-awareness in the human sense. Instead, our hope is to provide a computational architecture for allocating resources and improving responses that also serves as a first step toward more sophisticated approaches for full artificial metacognition.

The next phase in our work involves validating the framework with extensive testing, measuring how metacognitive monitoring improves performance across diverse tasks, and extending the framework to start reasoning about reasoning, or metareasoning. We’re particularly interested in scenarios where recognizing uncertainty is crucial, such as in medical diagnoses, legal reasoning, and generating scientific hypotheses.

Our ultimate vision is generative AI systems that don’t just process information but understand their cognitive limitations and strengths. This means systems that know when to be confident and when to be cautious, when to think fast and when to slow down, and when they’re qualified to answer and when they should defer to others.

This article is republished from The Conversation under a Creative Commons license. Read the original article.