A study predicts that bad actors will use AI daily by mid-2024 to spread toxic content into mainstream online communities, potentially impacting elections. Credit: SciTechDaily.com

A study forecasts that by mid-2024, bad actors will increasingly use AI in their daily activities. The research, conducted by Neil F. Johnson and his team, explores online communities associated with hate. The team searched for terminology listed in the Anti-Defamation League Hate Symbols Database and identified groups flagged by the Southern Poverty Law Center.

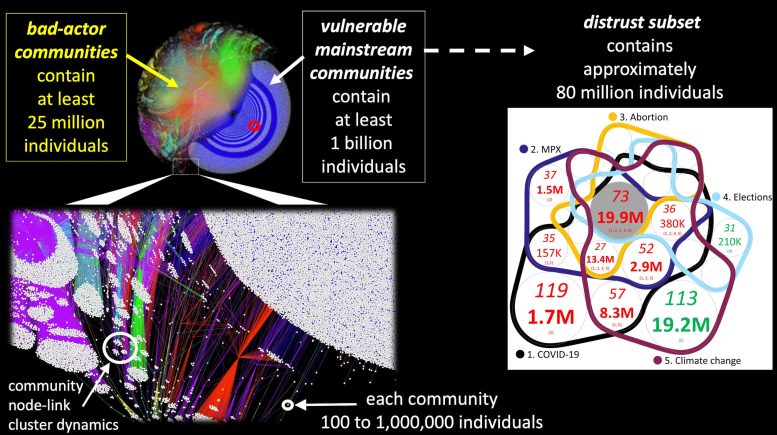

From an initial list of “bad-actor” communities found using these terms, the authors assess the communities that those bad-actor communities link to. The authors repeat this procedure to generate a network map of bad-actor communities and the more mainstream online groups they link to.
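The repeated link-following step can be illustrated with a minimal sketch. It is not the authors' code: the `links` dictionary, the `is_bad_actor` check, and the function name are hypothetical stand-ins for the study's link data and manual assessment. The sketch assumes that only bad-actor communities are expanded further, and that any other community they link to is recorded as a mainstream community.

```python
from collections import deque


def build_network(seeds, links, is_bad_actor):
    """Expand a seed list of bad-actor communities by following their links.

    seeds        -- community ids found by the keyword search (hypothetical input)
    links        -- dict: community id -> set of community ids it links to (hypothetical)
    is_bad_actor -- callable standing in for the assessment of a linked community
    Returns (bad_actors, mainstream, edges).
    """
    bad_actors = set(seeds)
    mainstream = set()
    edges = set()
    frontier = deque(bad_actors)

    while frontier:
        source = frontier.popleft()
        for target in links.get(source, ()):
            edges.add((source, target))
            if target in bad_actors or target in mainstream:
                continue  # already classified in an earlier step
            if is_bad_actor(target):
                bad_actors.add(target)
                frontier.append(target)  # repeat the procedure from this community
            else:
                mainstream.add(target)  # linked to, but not expanded further

    return bad_actors, mainstream, edges
```

In this sketch the process stops on its own once no newly found community is assessed as a bad actor.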

Mainstream Communities Categorized as “Distrust Subset”

Some mainstream communities are categorized as belonging to a “distrust subset” if they host significant discussion of COVID-19, MPX, abortion, elections, or climate change. Using the resulting map of the current online bad-actor “battlefield,” which includes more than 1 billion individuals, the authors project how AI may be used by these bad actors.

The bad-actor–vulnerable-mainstream ecosystem (left panel). It comprises interlinked bad-actor communities (colored nodes) and vulnerable mainstream communities (white nodes, which are communities to which bad-actor communities have formed a direct link). This empirical network is shown using the ForceAtlas2 layout algorithm, which is spontaneous, hence sets of communities (nodes) appear closer together when they share more links. Different colors correspond to different platforms. The small red ring shows the 2023 Texas shooter’s YouTube community as an illustration. The right panel shows a Venn diagram of the topics discussed within the distrust subset. Each circle denotes a category of communities that discuss a specific set of topics, listed at the bottom. The medium-sized number is the number of communities discussing that specific set of topics, and the largest number is the corresponding number of individuals, e.g. the gray circle shows that 19.9M individuals (73 communities) discuss all 5 topics. A number is red if a majority are anti-vaccination and green if a majority are neutral on vaccines. Only regions with > 3% of total communities are labeled. Anti-vaccination dominates. Overall, this figure shows how bad-actor AI could quickly achieve global reach and could also grow rapidly by drawing in communities with existing distrust. Credit: Johnson et al.

The authors predict that bad actors will increasingly use AI to continuously push toxic content onto mainstream communities. They expect bad actors to rely on early iterations of AI tools, because these programs have fewer filters designed to prevent misuse, are freely available, and are small enough to fit on a laptop.

AI-Powered Attacks Almost Daily by Mid-2024

The authors predict that such bad-actor AI attacks will occur almost daily by mid-2024, in time to affect U.S. and other global elections. The authors emphasize that because AI is still new, their predictions are necessarily speculative, but they hope their work will nevertheless serve as a starting point for policy discussions about managing the threats of bad-actor AI.

Reference: “Controlling bad-actor-artificial intelligence activity at scale across online battlefields” by Neil F Johnson, Richard Sear and Lucia Illari, 23 January 2024, PNAS Nexus.

DOI: 10.1093/pnasnexus/pgae004