Quantum computers may soon tackle problems that stump today’s powerful supercomputers, even when riddled with errors.

Computation and accuracy go hand in hand. But a new collaboration between IBM and UC Berkeley showed that perfection isn’t necessarily required for solving challenging problems, from understanding the behavior of magnetic materials to modeling how neural networks behave or how information spreads across social networks.

The teams pitted IBM’s 127-qubit Eagle chip against supercomputers at Lawrence Berkeley National Lab and Purdue University on increasingly complex tasks. With easier calculations, Eagle matched the supercomputers’ results every time, suggesting that even with noise, the quantum computer could generate accurate responses. But where it shone was in its ability to tolerate scale, returning results that are, in theory, far more accurate than what’s possible today with state-of-the-art silicon computer chips.

At the heart is a post-processing technique that decreases noise. Similar to looking at a large painting, the method ignores each brush stroke. Rather, it focuses on small portions of the painting and captures the general “gist” of the artwork.

The study, published in Nature, isn’t chasing quantum advantage, the theory that quantum computers can solve problems faster than conventional computers. Rather, it shows that today’s quantum computers, even when imperfect, may become part of scientific research (and perhaps our lives) sooner than expected. In other words, we’ve now entered the realm of quantum utility.

“The crux of the work is that we can now use all 127 of Eagle’s qubits to run a pretty sizable and deep circuit, and the numbers come out correct,” said Dr. Kristan Temme, principal research staff member and manager for the Theory of Quantum Algorithms group at IBM Quantum.

The Error Terror

The Achilles heel of quantum computers is their errors.

Similar to classic silicon-based computer chips (those running in your phone or laptop), quantum computers use packets of data called bits as the basic unit of calculation. What’s different is that in classical computers, bits represent 1 or 0. But thanks to quantum quirks, the quantum equivalent of bits, qubits, exist in a state of flux, with a chance of landing in either position.

This weirdness, along with other attributes, makes it possible for quantum computers to simultaneously compute multiple complex calculations (essentially, everything, everywhere, all at once, wink), making them, in theory, far more efficient than today’s silicon chips.

Proving the idea is harder.

“The race to show that these processors can outperform their classical counterparts is a difficult one,” said Drs. Göran Wendin and Jonas Bylander at the Chalmers University of Technology in Sweden, who were not involved in the study.

The main trip-up? Errors.

Qubits are finicky things, as are the ways in which they interact with each other. Even minor changes in their state or environment can throw a calculation off track. “Developing the full potential of quantum computers requires devices that can correct their own errors,” said Wendin and Bylander.

The fairy tale ending is a fault-tolerant quantum computer. It would have thousands of high-quality qubits similar to the “perfect” ones used today in simulated models, all controlled by a self-correcting system.

That fantasy may be decades off. But in the meantime, scientists have settled on an interim solution: error mitigation. The idea is simple: if we can’t eliminate noise, why not accept it? The approach measures and tolerates errors while finding methods that compensate for quantum hiccups using post-processing software.

It’s a tough problem. One previous method, dubbed “noisy intermediate-scale quantum computation,” can track errors as they build up and correct them before they corrupt the computational task at hand. But the idea only worked for quantum computers running a few qubits, which rules it out for solving useful problems, because those will likely require thousands of qubits.

IBM Quantum had another idea. Back in 2017, they published a guiding theory: if we can understand the source of noise in the quantum computing system, then we can eliminate its effects.

The overall idea is a bit unorthodox. Rather than limiting noise, the team deliberately enhanced noise in a quantum computer using a technique similar to the one that controls qubits. This makes it possible to measure results from multiple experiments injected with varying levels of noise, and to develop ways to counteract its negative effects.

Back to Zero

In this study, the team generated a model of how noise behaves in the system. With this “noise atlas,” they could better manipulate, amplify, and eliminate the unwanted signals in a predictable way.

Using post-processing software called Zero Noise Extrapolation (ZNE), they extrapolated the measured “noise atlas” to a system without noise, like digitally erasing background hums from a recorded soundtrack.
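To make the extrapolation step concrete, here is a minimal sketch of the general idea, not the team’s actual software. It assumes the measured value decays exponentially as noise is amplified, which is a common modeling choice but an assumption here. The noise gain factors and “measurements” are synthetic stand-ins, and the function names are hypothetical.

```python
import math


def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def extrapolate_to_zero_noise(gains, values):
    """Estimate the noise-free value from runs at amplified noise levels.

    Assumption: values follow A * exp(-k * gain) and are all positive,
    so log(value) is linear in the gain and the intercept gives log(A).
    """
    _, intercept = linear_fit(gains, [math.log(v) for v in values])
    return math.exp(intercept)


# Synthetic stand-in data: a gain of 1.0 means the hardware's native noise,
# larger gains mean deliberately amplified noise.
ideal_value = 0.8   # hypothetical noise-free answer
decay_rate = 0.5    # hypothetical sensitivity to noise
gains = [1.0, 1.5, 2.0, 3.0]
measured = [ideal_value * math.exp(-decay_rate * g) for g in gains]

estimate = extrapolate_to_zero_noise(gains, measured)
print(f"zero-noise estimate: {estimate:.4f}")
```

With real hardware the measurements would scatter around the curve, so the estimate would carry an uncertainty that this sketch does not model.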

As a proof of concept, the team turned to a classic mathematical model used to capture complex systems in physics, neuroscience, and social dynamics. Called the 2D Ising model, it was originally developed nearly a century ago to study magnetic materials.

Magnetic objects are a bit like qubits. Imagine a compass. Its needle tends to point north, but it can land in any position depending on where you are, and that position determines its final state.

The Ising model mimics a lattice of compasses, in which each one’s spin influences its neighbor’s. Each spin has two states: up or down. Although originally used to describe magnetic properties, the Ising model is now widely used for simulating the behavior of complex systems, such as biological neural networks and social dynamics. It also helps with cleaning up noise in image analysis and bolsters computer vision.
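As a rough illustration of spins influencing their neighbors, the sketch below computes the energy of a small classical Ising lattice on a square grid. It is a textbook simplification, not the quantum version run on Eagle: the function name, the square-grid layout, and the coupling value are assumptions chosen for the example.

```python
def ising_energy(spins, coupling=1.0):
    """Energy of a classical 2D Ising lattice with nearest-neighbor coupling.

    spins: list of rows, each entry +1 (up) or -1 (down).
    Each neighboring pair contributes -coupling * s_i * s_j, so aligned
    neighbors lower the energy and opposed neighbors raise it.
    """
    rows, cols = len(spins), len(spins[0])
    energy = 0.0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:  # bond to the right-hand neighbor
                energy -= coupling * spins[r][c] * spins[r][c + 1]
            if r + 1 < rows:  # bond to the neighbor below
                energy -= coupling * spins[r][c] * spins[r + 1][c]
    return energy


aligned = [[1, 1], [1, 1]]
checkerboard = [[1, -1], [-1, 1]]
print(ising_energy(aligned))       # all four bonds aligned
print(ising_energy(checkerboard))  # all four bonds opposed
```

Counting each bond once (right and down only) avoids double counting, and the open boundaries keep the example small.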

The model is perfect for challenging quantum computers because of its scale. As the number of “compasses” increases, the system’s complexity rises exponentially and quickly outgrows the capability of today’s supercomputers. This makes it a perfect test for pitting quantum and classical computers mano a mano.
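A back-of-the-envelope calculation shows how fast that growth is. The sketch assumes a brute-force classical simulation that stores one complex number (16 bytes, as two 64-bit floats) for every possible up/down configuration. That is only one way to simulate such a system, and the supercomputers in the study relied on cleverer approximation methods, so treat the numbers as an upper-bound illustration.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number as two 64-bit floats


def state_vector_bytes(num_spins):
    """Memory needed to store every configuration of num_spins two-state spins."""
    return (2 ** num_spins) * BYTES_PER_AMPLITUDE


for n in (10, 30, 68, 127):
    print(f"{n:>3} spins: {state_vector_bytes(n):.3e} bytes")
```

Each added spin doubles the memory requirement, which is why exact brute-force simulation stops being practical long before 127 spins.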

An initial test focused on a small group of spins well within the supercomputers’ capabilities. The results were on the mark for both, providing a benchmark of the Eagle quantum processor’s performance with the error mitigation software. That is, even with errors, the quantum processor provided accurate results similar to those from state-of-the-art supercomputers.

For the next tests, the team stepped up the complexity of the calculations, eventually employing all of Eagle’s 127 qubits and over 60 different steps. At first, the supercomputers, armed with tricks to calculate exact answers, kept up with the quantum computer, pumping out surprisingly similar results.

“The level of agreement between the quantum and classical computations on such large problems was pretty surprising to me personally,” said study author Dr. Andrew Eddins at IBM Quantum.

As the complexity increased, however, classic approximation methods began to falter. The breaking point occurred when the team dialed up the qubits to 68 to model the problem. From there, Eagle was able to scale up to its entire 127 qubits, producing answers beyond the capability of the supercomputers.

It’s impossible to certify that the results are completely accurate. However, because Eagle’s performance matched results from the supercomputers up to the point where the latter could no longer keep up, the previous trials suggest the new answers are likely correct.

What’s Next?

The study is still a proof of concept.

Although it shows that the post-processing software, ZNE, can mitigate errors in a 127-qubit system, it’s still unclear if the solution can scale up. With IBM’s 1,121-qubit Condor chip set to release this year, and “utility-scale processors” with up to 4,158 qubits in the pipeline, the error-mitigating strategy may need further testing.

Overall, the method’s strength is in its scale, not its speed. The quantum speed-up was about two to three times faster than classical computers. The strategy also takes a short-term pragmatic approach by pursuing methods that minimize errors, as opposed to correcting them altogether, as an interim solution to begin utilizing these strange but powerful machines.

These strategies “will drive the development of device technology, control systems, and software by providing applications that could offer useful quantum advantage beyond quantum-computing research, and pave the way for truly fault-tolerant quantum computing,” said Wendin and Bylander. Although still in their early days, they “herald further opportunities for quantum processors to emulate physical systems that are far beyond the reach of conventional computers.”

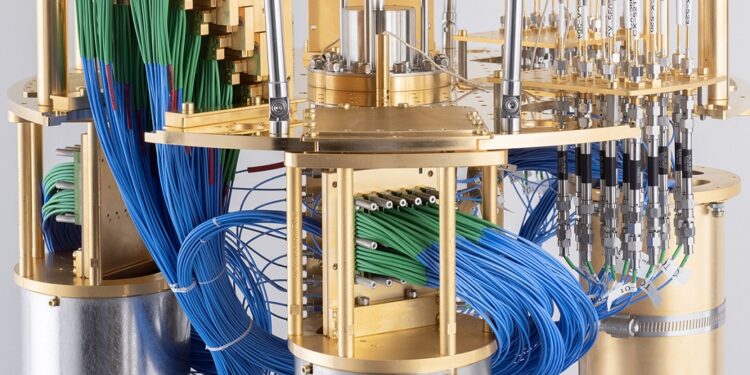

Image Credit: IBM