Humans are social animals, but there appear to be hard limits to the number of relationships we can maintain at once. New research suggests AI may be capable of collaborating in much larger groups.

In the 1990s, British anthropologist Robin Dunbar suggested that most humans can only maintain social groups of roughly 150 people. While there is considerable debate about the reliability of the methods Dunbar used to reach this number, it has become a popular benchmark for the optimal size of human groups in business management.

There is growing interest in using groups of AIs to solve tasks in various settings, which prompted researchers to ask whether today’s large language models (LLMs) are similarly constrained when it comes to the number of individuals that can effectively work together. They found the most capable models could cooperate in groups of at least 1,000, an order of magnitude more than humans.

“I was very surprised,” Giordano De Marzo at the University of Konstanz, Germany, told New Scientist. “Basically, with the computational resources we have and the money we have, we [were able to] simulate up to thousands of agents, and there was no sign at all of a breaking of the ability to form a community.”

To test the social capabilities of LLMs, the researchers spun up many instances of the same model and assigned each one a random opinion. Then, one by one, the researchers showed each copy the opinions of all its peers and asked if it wanted to update its own opinion.
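The shape of this procedure can be illustrated with a toy simulation. The Python sketch below is an assumption-heavy illustration, not the researchers' code: instead of prompting an LLM, each agent follows a made-up rule in which, with probability `p_follow`, it adopts the most common opinion among its peers, and otherwise picks an opinion at random. The function name `reaches_consensus`, the two-opinion default, the random visiting order, and the sweep limit are all hypothetical choices.

```python
import random
from collections import Counter


def reaches_consensus(n_agents, p_follow, opinions=("A", "B"), max_sweeps=50, seed=0):
    """Toy model of the consensus experiment (hypothetical; not the study's code).

    Every agent starts with a random opinion. In each sweep the agents are
    visited one at a time in random order; each sees its peers' current
    opinions and may update its own. A made-up rule stands in for the LLM:
    with probability p_follow the agent adopts the peers' most common
    opinion, otherwise it picks an opinion at random.
    Returns True if all agents agree at the end of some sweep.
    """
    if n_agents < 2:
        return True  # a single agent trivially agrees with itself
    rng = random.Random(seed)
    state = [rng.choice(opinions) for _ in range(n_agents)]
    counts = Counter(state)  # running tally of every agent's opinion

    for _ in range(max_sweeps):
        for i in rng.sample(range(n_agents), n_agents):
            own = state[i]
            counts[own] -= 1  # exclude agent i, so counts now describe its peers
            if rng.random() < p_follow:
                top = max(counts.values())
                leaders = [op for op, c in counts.items() if c == top]
                # On a tie, keep the current opinion if it is among the leaders.
                new = own if own in leaders else leaders[0]
            else:
                new = rng.choice(opinions)  # a "noisy" update that ignores the peers
            counts[new] += 1
            state[i] = new
        if len(set(state)) == 1:  # consensus: everyone holds the same opinion
            return True
    return False


if __name__ == "__main__":
    for p in (1.0, 0.9, 0.5):
        print(p, [reaches_consensus(n, p) for n in (10, 50, 1000)])
```

Under this toy rule, a perfectly reliable agent (`p_follow=1.0`) always brings a two-opinion group to agreement within a single sweep, while a noisy one (`p_follow=0.5`) essentially never gets 1,000 agents to agree at the same moment, because every extra agent is another chance for a stray opinion. Whether real LLMs behave anything like this rule is an open assumption.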

The team found that the likelihood of the group reaching consensus was directly related to the power of the underlying model. Smaller or older models, like Claude 3 Haiku and GPT-3.5 Turbo, were unable to come to agreement, while the 70-billion-parameter version of Llama 3 reached agreement if there were no more than 50 instances.

But for GPT-4 Turbo, the most powerful model the researchers tested, groups of up to 1,000 copies could achieve consensus. The researchers didn’t test larger groups due to limited computational resources.

The results suggest that larger AI models could potentially collaborate at scales far beyond humans, Dunbar told New Scientist. “It certainly looks promising that they could get together a group of different opinions and come to a consensus much faster than we could do, and with a bigger group of opinions,” he said.

The results add to a growing body of research into “multi-agent systems” that has found groups of AIs working together can do better at a variety of math and language tasks. However, even if these models can effectively operate in very large groups, the computational cost of running so many instances may make the idea impractical.

Also, agreeing on something doesn’t mean it’s right, Philip Feldman at the University of Maryland told New Scientist. It perhaps shouldn’t be surprising that identical copies of a model quickly form a consensus, but there’s a good chance that the solution they settle on won’t be optimal.

Nonetheless, it does seem intuitive that AI agents are likely to be capable of larger-scale collaboration than humans, as they’re unconstrained by biological bottlenecks on speed and information bandwidth. Whether current models are smart enough to take advantage of that is unclear, but it seems entirely possible that future generations of the technology will be able to.

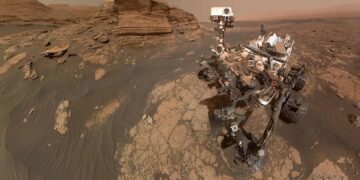

Image Credit: Ant Rozetsky / Unsplash